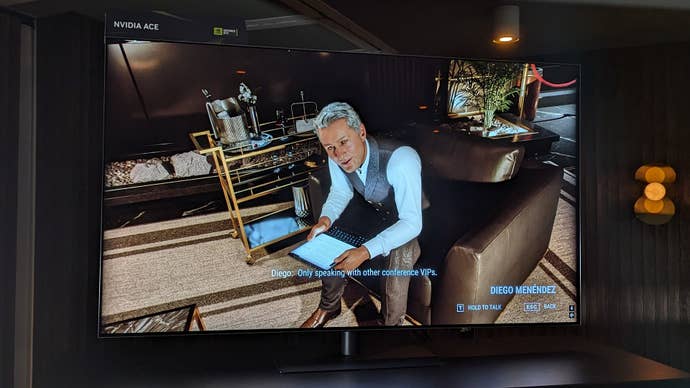

The conversation started, as most of mine do, awkwardly.

But he *could* recommend the hotel bar, so I asked in turn about their best cocktail.

This was a mistake.

But it also… worked?

As a game interaction, our question-and-answer session progressed perfectly logically.

or “I'm not sure what you mean” hiccups.

So yes, it was neat.

Not that the masquerade was particularly well-maintained elsewhere.

All the classic text-to-speech tells, basically.

Then there's the writing.

No sparkle, no playfulness, no real weight to the words.

And its attempt at a grizzled private dick-speak voiceover amounted to a tragically bland “A bar. Could go for an Old Fashioned right about now. But focus, Marcus, focus.”

Nobody Wants to Die, it ain't.

Thing is, game developers apparently aren't keen on waiting.

Besides Ubisoft, I'm told multiple companies have already approached Nvidia about using ACE to build their NPC casts.

I don't say that as some blanket AI disliker, either.

Upscaling darling *DLSS*, to give the obvious example, or the instant visual upgrades of *Ray Reconstruction*.

Despite producing mods of varying quality, *RTX Remix* has been a net good so far as well.

And even when it does, will enough players actually want to hear what AI voices have to say?

Even ACE's own cogs and gears don't suggest otherwise.

Tae Hyun's mood and speech may be AI-generated, but they have to be generated *from* something.

And all of it was crafted from scratch not by AI, but by a human writer.